Biwi Kinect Head Pose Database

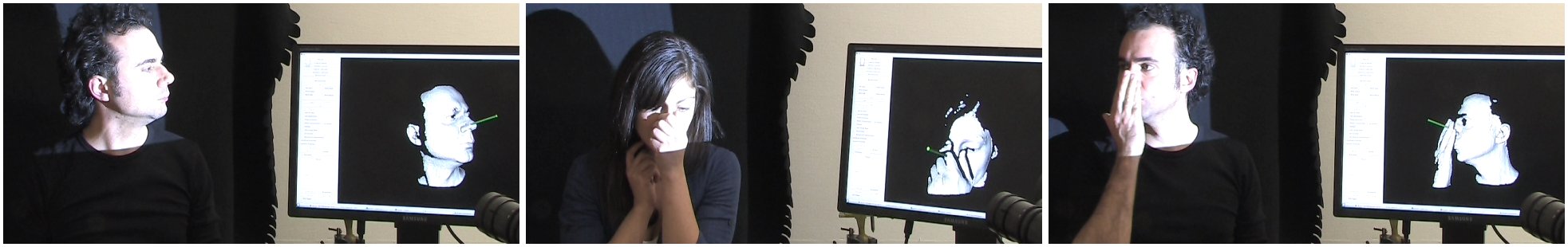

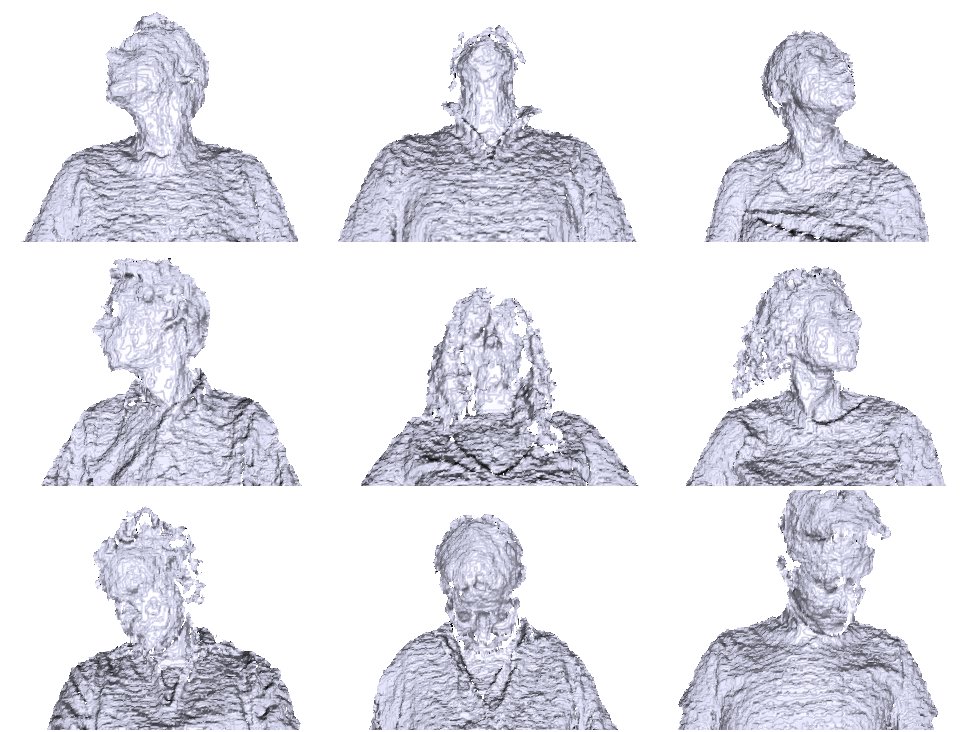

Because cheap consumer devices (e.g., Kinect) acquire low-resolution, noisy depth data, we could not train our algorithm on clean, synthetic images as was done in our previous CVPR work. Instead, we recorded several people sitting in front of a Kinect, at a distance of about one meter. The subjects were asked to turn their heads freely, trying to span all the yaw/pitch angles they could perform.

To evaluate our real-time head pose estimation system, the sequences were annotated using the automatic system of www.faceshift.com: each frame is annotated with the 3D location of the head center and the head rotation angles.

The dataset contains over 15K images of 20 people (6 females and 14 males; 4 people were recorded twice). For each frame, a depth image, the corresponding RGB image (both 640x480 pixels), and the annotation are provided. The head pose range covers about ±75 degrees yaw and ±60 degrees pitch. Ground truth is provided in the form of the 3D location of the head and its rotation.
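As an illustration of working with rotation annotations, the following minimal sketch converts between yaw/pitch/roll angles and a 3x3 rotation matrix, and checks a pose against the approximate range quoted above. The angle convention (yaw about the y-axis, pitch about the x-axis, roll about the z-axis, composed as R = Rz(roll) · Ry(yaw) · Rx(pitch)) and all function names are assumptions made for this example; the convention actually used by the annotations should be checked against the readme and sample code.

```python
import math


def rotation_from_angles(yaw_deg, pitch_deg, roll_deg):
    """Build R = Rz(roll) * Ry(yaw) * Rx(pitch) (assumed convention)."""
    y, p, r = (math.radians(a) for a in (yaw_deg, pitch_deg, roll_deg))
    cy, sy = math.cos(y), math.sin(y)
    cp, sp = math.cos(p), math.sin(p)
    cr, sr = math.cos(r), math.sin(r)
    return [
        [cr * cy, cr * sy * sp - sr * cp, cr * sy * cp + sr * sp],
        [sr * cy, sr * sy * sp + cr * cp, sr * sy * cp - cr * sp],
        [-sy, cy * sp, cy * cp],
    ]


def angles_from_rotation(R):
    """Recover (yaw, pitch, roll) in degrees; valid while |yaw| < 90."""
    yaw = math.atan2(-R[2][0], math.hypot(R[0][0], R[1][0]))
    pitch = math.atan2(R[2][1], R[2][2])
    roll = math.atan2(R[1][0], R[0][0])
    return tuple(math.degrees(a) for a in (yaw, pitch, roll))


def within_reported_range(yaw_deg, pitch_deg, max_yaw=75.0, max_pitch=60.0):
    """Check a pose against the approximate range quoted for the dataset."""
    return abs(yaw_deg) <= max_yaw and abs(pitch_deg) <= max_pitch


if __name__ == "__main__":
    R = rotation_from_angles(30.0, -20.0, 10.0)
    yaw, pitch, roll = angles_from_rotation(R)
    print(yaw, pitch, roll, within_reported_range(yaw, pitch))
```

The decomposition is unambiguous only while the yaw magnitude stays below 90 degrees, which holds for the pose range described here.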

Even though our algorithms work on depth images alone, we provide the RGB images as well. Please note that this is a database acquired with frame-by-frame estimation in mind, not tracking. For this reason, some frames are missing.
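Since some frames are missing, code that iterates over a sequence should not assume consecutive frame indices. The sketch below lists the gaps in a sequence from its file names. The naming pattern `frame_<index>_...` is an assumption made for this example, and `missing_frame_indices` is a hypothetical helper, not part of the provided sample code.

```python
import re

# Assumed naming scheme for this sketch: "frame_<index>_<suffix>".
FRAME_PATTERN = re.compile(r"frame_(\d+)_")


def missing_frame_indices(filenames):
    """Return the frame indices absent between the first and last frame seen."""
    present = set()
    for name in filenames:
        match = FRAME_PATTERN.search(name)
        if match:
            present.add(int(match.group(1)))
    if not present:
        return []
    return [i for i in range(min(present), max(present) + 1) if i not in present]


if __name__ == "__main__":
    names = ["frame_00003_depth.bin", "frame_00004_rgb.png", "frame_00007_pose.txt"]
    print(missing_frame_indices(names))
```

In practice the list of names would come from a directory listing of one recorded sequence, for example via `os.listdir`.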

The database is made available for research purposes only. You are required to cite our work whenever publishing anything directly or indirectly using the data:

@article{fanelli_IJCV,

author = {Fanelli, Gabriele and Dantone, Matthias and Gall, Juergen and Fossati, Andrea and Van Gool, Luc},

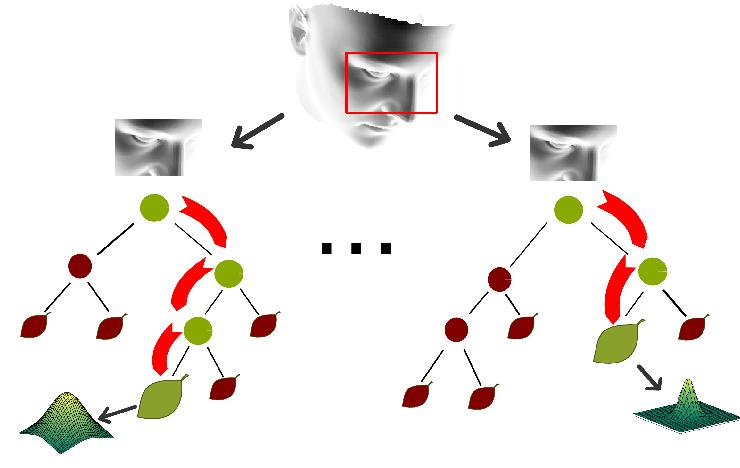

title = {Random Forests for Real Time 3D Face Analysis},

journal = {Int. J. Comput. Vision},

year = {2013},

month = {February},

volume = {101},

number = {3},

pages = {437--458}

}

Files:

Data (5.6 GB, .tgz compressed)

Binary ground truth files

Masks used to select positive patches

Readme file

Sample code for reading depth images and ground truth
